Written by Neuvik co-founder Tillery with Ryan Leirvik, CEO and founder of Neuvik.

Introduction

In a perfect world, we don’t need penetration testing. In a perfect world, we don’t need red team operations. In a perfect world, technology is perfect from design to release, and the only things left to find in production have no noticeable security impact.

But the world isn’t perfect, and technology can’t be perfect; where there is technology, there is risk associated with the use of that technology.

This is where concepts like DevSecOps and the “shift left” come into play – working together, we remove (through design) or mitigate (through intervention) imperfections as early as possible in the process.

This is also where professional training comes into play. Practitioner training should be a part of any well-functioning cybersecurity program – no matter how strong the technical controls are perceived to be. Everyone has room to grow as we collectively make the world safer.

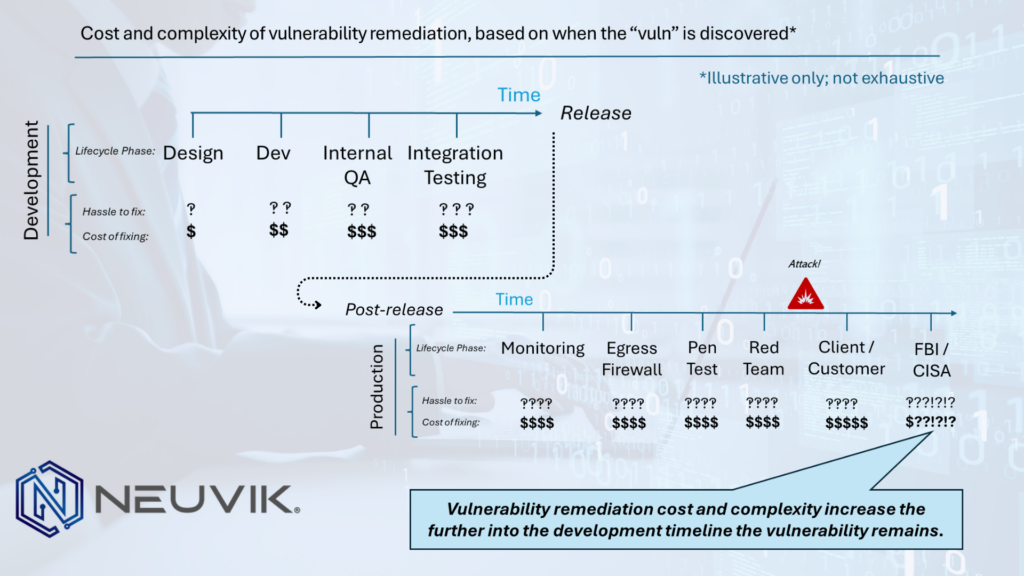

Why does this matter in product development? Well, let’s look at the timeline of a product launch.

How development timelines usually happen

Typically, development prioritizes functionality over security. A developer is concerned with completing their deliverable within the project timeline, and only when that deliverable enters QA testing is it examined for vulnerabilities. Should any security vulnerabilities go unnoticed, those vulnerabilities then enter production – where they may be discovered through monitoring, external/internal testing, or, unfortunately, criminal exploitation.

Vulnerabilities are introduced in design, implementation, or both. These stages occur prior to release and execution, but the vulnerabilities are realized in production. So our platonic ideal of security catches design and implementation flaws early, for removal or mitigation. If that chance is missed, the flaws make it into the product, where they may still be found through manual testing, QA, or automated tooling like SAST/DAST/IAST. If flaws are found, we go back to the drawing board and find a way to fix them. More time. More cost. Fewer cool new problems solved while we fix our solutions to the last problem. But at least they haven’t made it to production.

Unless we miss them there, too! Now, we’re on the operational side of the timeline. We’re relying on externalities and post hoc identification through technology, personnel, or both.

Development is iterative and cyclical. Issues realized later mean reapproaching problems we thought were solved. In our perfect world, a maximum amount of impact is created with a minimum amount of time. In the real world, issues creep in. They’re found late. They require more and more time to solve. Their existence means impact: lost work, fines and penalties, legal fees, incident response costs, and harm to human wellbeing and even human safety. It means spending resources to identify, discover, alert on, and act on them. It means damage from exploitation, or the cost of third parties to find them, or both. It raises new, scary questions: How long has this vulnerability been here? How long did it go unnoticed before being exploited? In the best of cases, the longer it has gone unnoticed, the more it costs you in people and money. In the worst cases, the more it costs you and your customers in damage, both monetary and reputational.

So what? What do we do about this?

We take the perspective that “you may learn it.”

We are coming from a world where, historically, security was an afterthought and an externality – that’s a problem, but we can solve it by putting the knowledge in the hands of the people for whom it does the most good.

Developers and architects learn what insecure means, and how to implement securely. Testers (whether developers or a separate group of people) learn how to test for security-impacting edge conditions, corner cases, program and system states. Everyone better understands underlying systems (both technical, such as architecture, and theoretical, such as algorithms) in order to better see subtle interactions. Better tooling gives us better insight into our operating environments. External testers learn about new technologies and how to test them.
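As one illustration of what testing for a security-impacting edge condition can look like, here is a minimal Python sketch. Everything in it is an assumption made for the example: the helper names `resolve_under` and `is_rejected`, the base directory `/srv/app-data`, and the sample inputs are hypothetical and not taken from any particular product. It shows a file-path check together with the kinds of hostile inputs a tester might learn to try, rather than only the happy path.

```python
import os


def resolve_under(base_dir: str, user_path: str) -> str:
    """Resolve user_path inside base_dir, or raise ValueError if it escapes.

    Hypothetical helper, for illustration only.
    """
    base = os.path.realpath(base_dir)
    # os.path.join discards `base` when user_path is absolute, so the
    # containment check must run on the fully resolved result.
    candidate = os.path.realpath(os.path.join(base, user_path))
    # commonpath compares whole path components, so a sibling directory such
    # as "/srv/app-data-evil" is not mistaken for being inside "/srv/app-data".
    if os.path.commonpath([base, candidate]) != base:
        raise ValueError(f"path escapes base directory: {user_path!r}")
    return candidate


def is_rejected(base_dir: str, user_path: str) -> bool:
    """Return True when resolve_under refuses the given path."""
    try:
        resolve_under(base_dir, user_path)
    except ValueError:
        return True
    return False


if __name__ == "__main__":
    base = "/srv/app-data"  # hypothetical base directory

    # Functional case: an ordinary relative path is accepted.
    assert not is_rejected(base, "reports/q1.txt")

    # Security edge cases: each of these tries to leave the base directory.
    hostile_inputs = [
        "../secrets.txt",             # simple parent traversal
        "reports/../../secrets.txt",  # traversal hidden behind a valid prefix
        "/etc/passwd",                # absolute path replaces the base
        "../app-data-evil/q1.txt",    # sibling directory sharing a name prefix
    ]
    for hostile in hostile_inputs:
        assert is_rejected(base, hostile), hostile

    print("all edge-case checks passed")
```

A purely functional test would stop after the first assertion. The remaining inputs are the corner cases that a naive check, such as a plain string-prefix comparison, could let through.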

Training, knowledge sharing, upskilling: by any name, it is the rising tide that lifts all security ships. That also means security, and by extension learning, is everyone’s job. It’s better for the business, it’s better for the people, it’s better for the world.

There are so many knowledge domains at play here that no one can do them all, but we all come together and do some. Security is a gestalt game where we become better than the sum of our parts. That’s what the security community is about. And that means all of us need to have a baseline knowledge, and we all need to grow.

Practitioner training should be a part of any well-functioning cybersecurity program, and Neuvik continues to believe that training is the foundation of better, longer-lasting security. It is the answer that drives us away from the problems and toward our more perfect world.

For more on how issues can be found and addressed early, see the blog series “A Retrospective Vulnerability Case Study”: Part 1 (The Red Teamer’s POV), Part 2 (The QA Tester’s POV), and Part 3 (The Developer’s POV), where we discuss the identification of the same real-world vulnerability across the timeline.

About the Authors

Tillery

Tillery served as a founder of Neuvik and as the Director of Professional Cybersecurity Training. Tillery has been in formal education and professional training roles for the US Department of Defense as well as for commercial companies for more than a decade. Tillery brings deep technical knowledge and pedagogical training to instruction in cybersecurity, computer science, and mathematics.

- LinkedIn: https://www.linkedin.com/in/aretillery/

- Twitter: https://x.com/AreTillery

Ryan Leirvik

Ryan is the Founder and CEO of Neuvik. He has over two decades of experience in offensive and defensive cyber operations, computer security, and executive communications. His expertise ranges from discovering technical exploits to preparing appropriate information security strategies to defend against them. Ryan is also the author of “Understand, Manage, and Measure Cyber Risk,” former lead of an R&D cybersecurity company, Chief of Staff and Associate Director of Cyber for the US Department of Defense at the Pentagon, cybersecurity strategist with McKinsey & Co, and technologist at IBM.

- LinkedIn: https://www.linkedin.com/in/leirvik/

- Twitter: https://x.com/Leirvik