In this month’s blog series, we’ve moved “backwards through time” using a 2017 T-Mobile case study to illustrate what can be learned from three roles discovering a vulnerability in an operational system at varying points in the development cycle.

Our previous two installments discussed the lessons from a red teamer’s discovery of that vulnerability, then explored what happens if a QA tester discovers it in the late stages of a software development lifecycle (SDLC). These two perspectives highlight something important: finding and mitigating vulnerabilities early improves overall security and reduces the impact on productivity.

Which raises the question: how might a developer find a vulnerability during the design phase? This post discusses how application threat modeling helps a developer do just that.

Applying the insights from these three perspectives to secure development gives us a more holistic view of cybersecurity. The perspectives inform one another to strengthen an organization’s overall cyber resilience and mature the systems we rely upon.

In this series, using a 2017 T-Mobile web application vulnerability as a case study, we examine these perspectives through:

- A red teamer’s point of view of a production analysis on a system

- A development-focused point of view examining the late stages of an SDLC with emphasis on Quality Assurance

- A holistic point of view of the design phase and application threat modeling

With this method, we started at the end and have worked our way backwards to show how a real-life vulnerability found in production could have been found earlier.

In this installment, we look at that from a developer’s point of view.

Why the Developer’s POV Matters:

Developers, particularly those designing a feature, have the most information and context about the engineering going into it — which can create the most complete understanding of security for that feature. When developers create with “secure-by-design” requirements in mind, they can reduce the need for mitigating security flaws externally.

The developer answers the question “what are our initial requirements and design” prior to development, inviting the opportunity to place security decisions first and foremost in the design phase.

Many practices contribute to secure-by-design, the most foundational and powerful of which is application threat modeling. Here, the developer looks at the actual engineering design from a security perspective, thus, “shifting left” to the very beginning of the development cycle (as opposed to the end of the development cycle) for greater impact on overall security.

Analysis — A Developer’s Journey

Imagine you are a developer tasked with implementing a new feature for T-Mobile’s web application.

Due to a recent uptick in penetration testing findings, your organization has chosen to adopt additional DevSecOps practices in order to find potential vulnerabilities before they are released into production. As part of the effort to “shift left”, your organization has identified a need to reduce the number of vulnerabilities found in production code.

To that end, Security by Design has been introduced as a foundational part of development. The first, and best, element of this design-time thinking is threat modeling: a process for catching security vulnerabilities before the code is even written.

Application threat modeling is a process through which a design is analyzed for threats or risks to security. This practice can occur as soon as the design exists, which means that implementation is not required. That allows for it to be completed in the earliest stages of the software development life cycle.

In this instance, you are given the business requirement to create a profile page that displays information about a user, including the lines on their account. A single account may have multiple lines associated with it, and the page should display information about each. Because the feature requirements did not clarify what information about which line should be displayed, you work with the product owner to clarify the requirements, determining the subset of line information to display.

Now that the requirements for this feature have been clarified, it’s time to move into feature design. You first model a page that queries the different types of information to be displayed, including but not limited to information about lines on the account. Once the initial design is done, you can perform threat modeling to determine if there are risks in the design that can, and should, be mitigated.

There are several threat modeling methodologies that can be employed. You choose Trike because:

- It focuses on architecture and data model correctness

- It emphasizes developer knowledge over assumed security expertise

- As a “crown-jewel” based methodology, it focuses protection on data and data flow that is most in need of protection based on the organization’s priorities

- It is exhaustive in its analysis of threats due to the application of Hazardous Operations analysis

We’re now ready to employ Trike.

Step one: decompose the application.

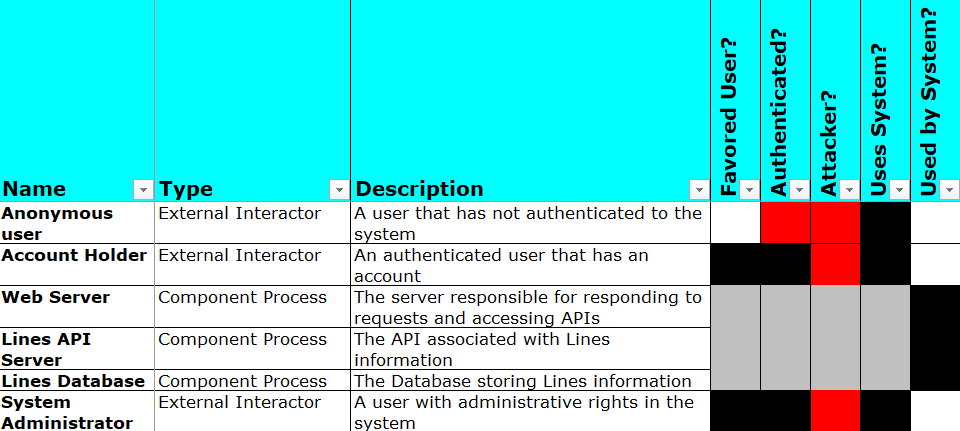

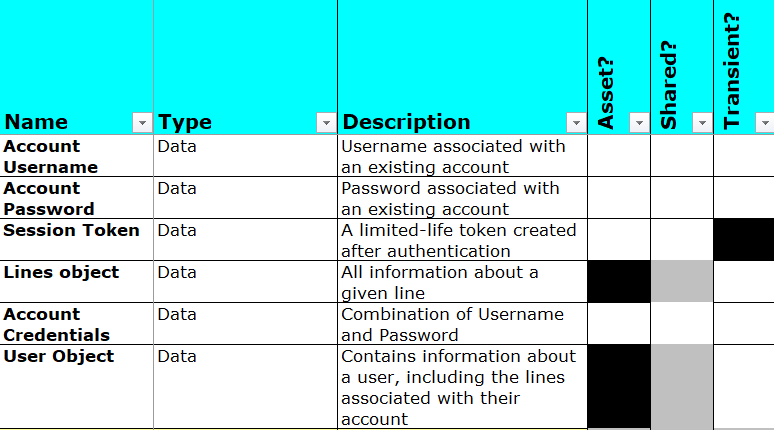

The first step is to decompose the application design into its component actors and data. Here, the actors are any roles or users that use, or are a part of, the application and its associated system, whether human or machine. Data represents information received, stored, transmitted, or processed within the application or its associated system.

Once the Actors and Data Model have been identified, you would next identify a subset of each: the Favored Users and Assets. Favored Users are those external actors for whom the application is designed and who should have access to protected data, while Assets are data of sufficient value or criticality that they must be protected.
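The decomposition above can be captured as plain data before any feature code exists. The following is a minimal sketch, not Trike’s own tooling: every actor and data type name is a hypothetical example invented for illustration, and which of them count as Favored Users or Assets is an assumption a real team would settle with the product owner.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Actor:
    name: str
    favored: bool  # True if the application is designed for this external actor


@dataclass(frozen=True)
class DataType:
    name: str
    is_asset: bool  # True if valuable or critical enough to require protection


# Hypothetical actors: anything, human or machine, that uses or is part of the system.
ACTORS = [
    Actor("account_holder", favored=True),
    Actor("customer_support_agent", favored=True),
    Actor("anonymous_visitor", favored=False),
    Actor("profile_api_service", favored=False),
]

# Hypothetical data model: information received, stored, transmitted, or processed.
DATA_MODEL = [
    DataType("line_information", is_asset=True),
    DataType("account_profile", is_asset=True),
    DataType("public_marketing_content", is_asset=False),
]

# The two subsets the rest of the analysis is built on.
favored_users = [actor.name for actor in ACTORS if actor.favored]
assets = [data.name for data in DATA_MODEL if data.is_asset]

print("Favored Users:", favored_users)
print("Assets:", assets)
```

Keeping the model as data makes it easy to review alongside the design and to reuse in the later steps.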

During the decomposition, you determine that the existing information about lines contains within it protected information, and that only a subset of this information is needed for the profile page itself. This allows you to do some quick redesign to minimize the data sent by the API to the browser: you modify the design of the API to add a new endpoint that returns only that subset of the lines’ information which is required for display on the profile page. Voila, you’ve removed an Excessive Data Exposure risk!
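One way to picture the redesigned endpoint is as an allowlist applied on the server before anything is sent to the browser. This is a sketch under stated assumptions: the field names and the `to_profile_view` helper are hypothetical and do not come from the actual application.

```python
# Hypothetical allowlist agreed with the product owner: the only line
# fields the profile page needs to display.
PROFILE_PAGE_FIELDS = ("line_id", "nickname", "plan_name")


def to_profile_view(line_record: dict) -> dict:
    """Return only the allowlisted fields of a stored line record."""
    return {
        field: line_record[field]
        for field in PROFILE_PAGE_FIELDS
        if field in line_record
    }


# A stored record holds more than the page needs (all values are placeholders).
full_record = {
    "line_id": "line-1",
    "nickname": "Work phone",
    "plan_name": "Unlimited",
    "device_identifier": "placeholder-device-id",
    "billing_address": "placeholder-address",
}

print(to_profile_view(full_record))
```

An allowlist is used here rather than a blocklist so that fields added to the stored record later are not exposed by default.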

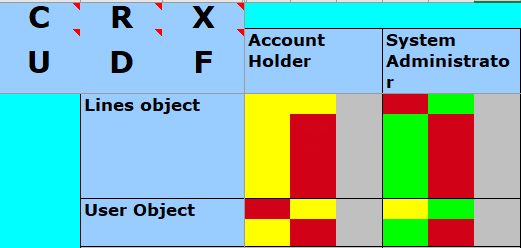

Step two: define intended actions.

After this, the model defines the intended actions — those interactions that should be allowed for each type of Favored User over each type of Asset. This identifies the foundational security requirements in terms of “should,” “should not,” and “should conditionally” rules over the data.
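The intended actions can be written down as a small matrix of rules. The sketch below assumes create/read/update/delete style actions and uses hypothetical actor and asset names; the deny-by-default lookup is a design choice made for this example, not something the case study specifies.

```python
from enum import Enum


class Rule(Enum):
    ALLOW = "should"
    DENY = "should not"
    CONDITIONAL = "should conditionally"


# Hypothetical intended-action matrix for one Favored User over one Asset.
INTENDED_ACTIONS = {
    ("account_holder", "line_information"): {
        "create": Rule.DENY,
        # Condition: the line belongs to the user's own account.
        "read": Rule.CONDITIONAL,
        "update": Rule.DENY,
        "delete": Rule.DENY,
    },
}


def intended(actor: str, asset: str, action: str) -> Rule:
    """Look up the rule for an interaction; anything unlisted is denied."""
    return INTENDED_ACTIONS.get((actor, asset), {}).get(action, Rule.DENY)


print(intended("account_holder", "line_information", "read").value)
print(intended("anonymous_visitor", "line_information", "read").value)
```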

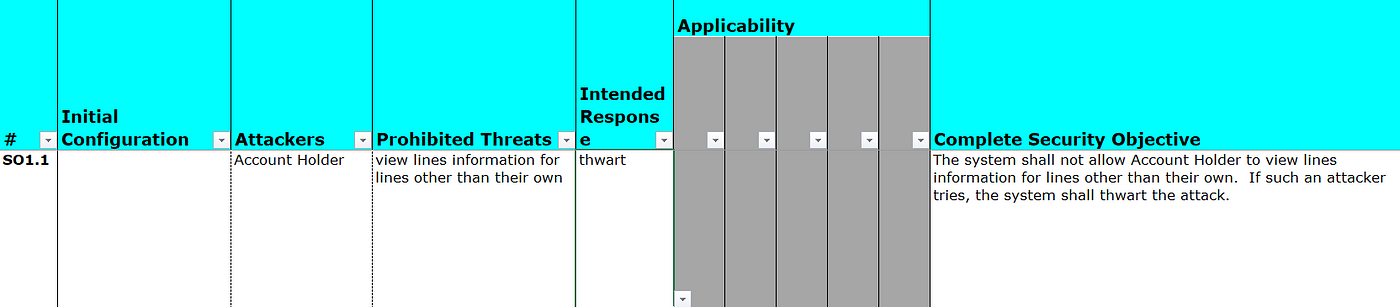

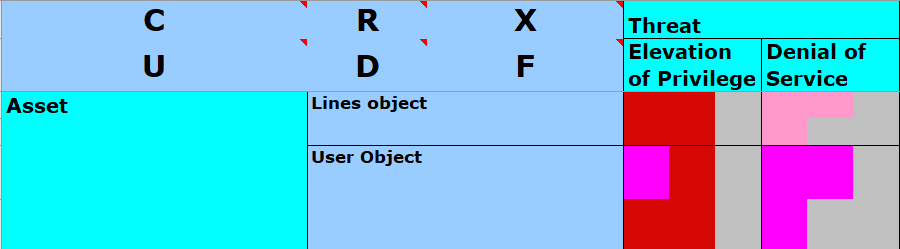

Step three: determine the criticality of unintended access and create a set of security objectives (“this role should not be able to do this thing, but if they can, here’s the step to prevent it”).

Once intended operation is defined, its inverse (the unintended) represents a security violation. By determining the criticality of each unintended operation, you form Security Objectives: the rules by which actions and interactions will be judged.
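To illustrate how objectives fall out of the inverse of the intended actions, here is a rough sketch. The actors, actions, criticality ratings, and the wording of each objective are all hypothetical; a real team would assign criticality based on its own priorities.

```python
ACTORS = ("account_holder", "anonymous_visitor")
ACTIONS = ("read", "update")
ASSET = "line_information"

# Intended actions. The value is the condition under which the action is
# allowed; an empty string would mean "allowed unconditionally".
INTENDED = {
    ("account_holder", "read"): "the line belongs to the user's own account",
}

# Hypothetical criticality of each action happening when it should not.
CRITICALITY = {"read": "high", "update": "critical"}


def derive_objectives() -> list[tuple[str, str]]:
    """Turn every unintended interaction into a (criticality, rule) pair."""
    objectives = []
    for actor in ACTORS:
        for action in ACTIONS:
            condition = INTENDED.get((actor, action))
            if condition == "":
                continue  # unconditionally intended, so nothing to forbid
            rule = f"{actor} must not {action} {ASSET}"
            if condition is not None:
                rule += f" unless {condition}"
            objectives.append((CRITICALITY[action], rule))
    return objectives


for criticality, rule in derive_objectives():
    print(f"[{criticality}] {rule}")
```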

Once these security objectives are defined, you can move on to the final step: defining and analyzing your step-by-step workflow. By designing the data and interaction model up front, and creating security requirements based on them, any subsequent application and workflow design can be compared against them in real time, making foundationally secure designs easier to create.

You start by entering the workflow as originally designed and determining if it violates any security objectives. It’s a good thing you identified the Excessive Data Exposure earlier, but you could also have identified it during this phase.

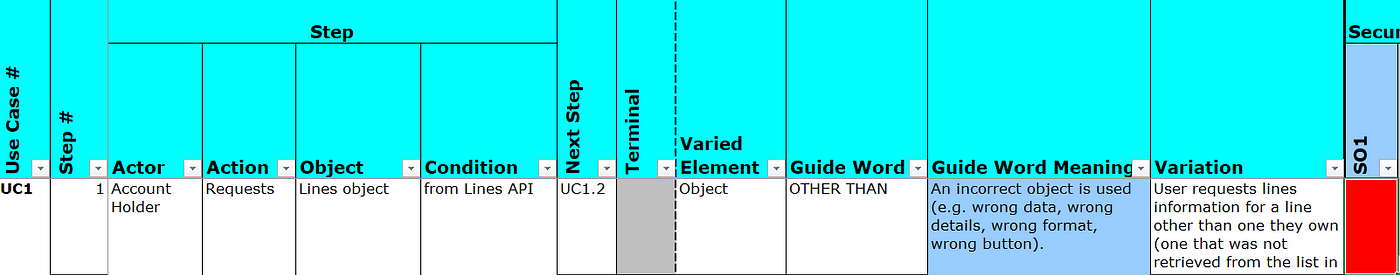

Now that you’ve analyzed normal application behavior, it’s time for the part that differentiates Trike from other threat modeling methodologies: Hazardous Operations Analysis (HazOp)!

HazOp is the process of applying variation to each step of the workflow you are creating. You vary each element of each step (the actor, the object, the action, and the condition) in various ways (such as “more than”, “less than”, “other than”). If the resulting variant action would violate your Security Objectives, this is a problem! You need to modify your design to add protections that prevent it from occurring.
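The variation process is mechanical enough to enumerate. The sketch below generates one review question per element and guide word for a single workflow step; the step contents are hypothetical, and only the three guide words named above are used.

```python
from itertools import product

# One hypothetical workflow step, split into the four elements to vary.
step = {
    "actor": "account_holder",
    "action": "read",
    "object": "own line information",
    "condition": "while authenticated",
}

GUIDE_WORDS = ("more than", "less than", "other than")


def hazop_variants(workflow_step: dict):
    """Yield one review question per (element, guide word) pair."""
    for element, guide in product(workflow_step, GUIDE_WORDS):
        yield f"What if the {element} is {guide} '{workflow_step[element]}'?"


variants = list(hazop_variants(step))
for question in variants:
    print(question)
```

Each question is then checked against the Security Objectives by a person; the code only makes sure no combination is skipped.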

During one of these variations, applying “other than” to the object, you see that if a user queries the API for a line they do not own, they still receive a response. This violates the Security Objective stating that a user should have conditional access to line information: access should be restricted to their own lines. A violated Security Objective means you’ve found another vulnerability and need to adjust your design accordingly.

You modify the line information API call once again — this time, to add an object-level authorization step. Voila! You have removed a Broken Object Level Authorization issue — the one this case study focuses on.
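An object-level authorization step boils down to comparing the owner of the requested object with the identity of the caller before returning anything. The following is a minimal sketch: the in-memory `LINES` store, the account IDs, and the `get_line_for_profile` function are hypothetical stand-ins for whatever data layer and session handling the real design would use.

```python
class Forbidden(Exception):
    """Raised when the caller may not access the requested line."""


# Hypothetical data store mapping each line to the account that owns it.
LINES = {
    "line-1": {"account_id": "acct-A", "nickname": "Work phone"},
    "line-2": {"account_id": "acct-B", "nickname": "Family tablet"},
}


def get_line_for_profile(session_account_id: str, line_id: str) -> dict:
    """Return profile data for a line only if the caller's account owns it."""
    line = LINES.get(line_id)
    # Missing lines and lines owned by another account get the same error,
    # so the response does not reveal which line IDs exist.
    if line is None or line["account_id"] != session_account_id:
        raise Forbidden("line not available for this account")
    return {"line_id": line_id, "nickname": line["nickname"]}


print(get_line_for_profile("acct-A", "line-1"))
try:
    get_line_for_profile("acct-A", "line-2")  # owned by acct-B
except Forbidden as error:
    print("denied:", error)
```

The account ID is taken from the authenticated session rather than from the request, since a value supplied by the client could itself be varied by an attacker.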

Because you found this during the design phase, you never implemented the vulnerable code, so it could never reach the testing phase, let alone production. You also now know to create tests that guard against this issue in future features, in case you miss it while modeling their designs, and you’ve created a new set of policies to help guide your development in the future.

The Power of “Security by Design” and “Shifting Left”

Over the course of this month, we’ve analyzed the same case study as we’ve traveled backwards through time in a development life cycle. In each post, we showed how this issue could have been found through security-focused activities, and we’ve seen that finding the issue earlier saves future work and aggravation.

Through threat modeling, we’ve discussed the power of security by design. Through the perspective of a QA tester, we’ve shown security’s role in functionality testing. Through the perspective of a red teamer, we’ve shown the importance of security interventions and testing even for released, supposedly functioning code, and with it the need for periodic auditing and review.

In other words, security does not belong to any one role. It is something collectively shared across an organization.

The earlier an organization performs security activities and their associated analyses, the better off it is from a security perspective, and the more time it saves in the process.