New Technology as a Source of Fraud and Risk

How do you initially react to each newly introduced advancement in technology?

As engineers and problem solvers, we typically think of advancements optimistically. We see them as exciting opportunities to help push forward improvements — because technology should make things better for people, whether through automating some boring process, optimizing some routine tasks, or allowing for new ways to solve hard problems, right?

Well it turns out attackers think this way, too: advancements in technology are opportunities… for them.

This should be nothing new. But what might be new is noting that attackers, when thinking about these opportunities, are most often NOT thinking about new outcomes, but rather new ways to achieve the same outcome they are already pursuing — like fraud.

With this thinking, emerging technologies represent NEW ways to pose OLD threats.

And if we think of them this way, then our defenses may remain focused on the same thing that we always (or should always) focus on — our most critical assets. Then, only our mechanisms need to adjust to new avenues of the same attacks.

If we’re already thinking about our problem this way — that is, what we need to defend — then we’re already well on our way to defending against what’s heading our way in terms of new technologies used for attacks.

Problems of Identity & Access

For the purpose of this article, we’ll focus on problems of identity and access through the lens of banking and financial fraud.

First, a quick level-set on fraud. Fraud is a problem relating to Identity and Access.

Identity poses the question, “Is the person or representative who they say they are?”

Access determines, “Can this user, process, or system interact with or utilize this asset?”

To illustrate how emerging technologies may impact the space of fraud, we’ll focus on two that are used to pose old threats right now and in the future: “deep fake” technologies and quantum computing.

We will provide a basic overview of how “deep fake” technologies can be used for identity-related fraud, and how quantum computing can enable data access to unauthorized users.

Case Study #1: “Deep Fake” Technologies & Identity

Let’s start with identity — is the person or representative who they say they are?

It’s likely that you (the reader) have heard about AI-generated “deep fakes.” When we think about them, we typically think about video, such as the 2018 Jordan Peele and President Obama video. But video is just image and audio. And in the world of banking and finance fraud, we mostly deal with audio.

A subset of “deep fake” technologies is the generation of realistic audio — or more specifically, realistic voices, including inflections and mannerisms. The math and neural-network algorithms behind these are fascinating, but we won’t get into that in here.

What is interesting for us as banking and financial transaction defenders is the impact that these technologies have — not how they are generated.

For identity purposes, a combination of a realistic voice (something you are) and personal information (something you know) is enough to convince someone you are who you say you are… even if you are not.

How “Deep Fakes” Promote Identity-Related Fraud

To test this, a Neuvik researcher created an AI-generated voice¹ with the goal of completing a successful customer-banking transaction².

Let’s see how it went:

No fraud was committed during this experiment. Permission from the authorized account holder was provided and properly supervised to complete this transaction. The content of the audio was not edited. The audio length was edited for brevity — to keep approx. 2 min in length.

What did you hear? A successful customer-banking transaction.

Yet, the “customer” in this scenario was not a human, but rather AI-generated audio. Despite not being a human-to-human interaction, however, this audio passed identity screening.

How did this work?

Creating the “deep fake” took our researcher eight hours to complete, cost roughly USD$11, and the researcher involved had no prior background in deep fakes. This is the equivalent of one business day and one cup of coffee (depending on where you live!).

For someone with knowledge of tools used in this space, this process would be faster (and potentially cheaper) to perform.

What was used?

Publicly available information was gathered using Open-Source Intelligence (OSINT) and was used to answer questions as posed by the financial institution. The artificial voice was created (that is, “trained”) using existing audio published to YouTube — something anyone attempting to replicate a voice would have access to.

What was the hardest part?

The hardest part of this entire process was getting permission from the user to create the “deep fake.”

This is something an attacker would not bother to do.

How did it all come together?

By combining these elements of identity, we were able to place a call to the user’s financial institution, pose as them in a realistic enough way to pass identity screening, and close an account, including having the funds mailed to an entirely new address.

Interestingly, it turns out the other end of this call was also being computer-generated. Conversations with the Credit Union managers after the call revealed that calls placed on the weekend are outsourced to an off-shore call center that itself uses artificial voices to sound “American” — so we had two computer voices, each controlled by a human, talking to each other!

This is only one example of multiple ways “deep fake” technology might be used for fraud. Anywhere that a malicious actor might want to take on their identity using audio, they could employ this strategy.

How do we defend against this?

Luckily, defending against these sorts of attacks doesn’t require some new future technology or millions in investment — it requires the same steps already being taken to defend against social engineering and account fraud.

Following change management processes without falling victim to “calls to emotion” go most of the way. Implement additional “challenge and response” mechanisms (like pre-shared passwords, or something only a real user might know) in important workflows.

In our example above, the call center allowed us to bypass some important protections: calling from a known number and sending an OTP. Both would have created hurdles for the attacker, but these could also be targeted by SIM swaps and other attacks.

Adding additional secrets like PINs or passwords, or out-of-band confirmation via email or phone calls, would add another layer of protection. These probably sound familiar — they’re defenses that are already put in place for change management and fraud.

Now that we’ve addressed one example of a fake “identity” problem, let’s turn our attention to an unauthorized “access” problem.

Case Study #2: Quantum Computing & Access

One way that unauthorized access is prevented is through data encryption: the translation of usable readable data (aka “plain text”) into a form not readily usable as data (like encryption), but usable when reversed (that is, the original data may be retrieved if you know the process and keys). If data is encrypted using strong enough methods, it is believed to be “secure.” However, when we look at an emerging technology like the concept of quantum computing, that perception is challenged.

As with most elements of security, the goal of encryption is not necessarily to create something that can never be broken (with sufficient time and effort, the math that is used in the encryption process can be “broken” to allow for decryption of the data). Rather, the goal is to make the cost (in time and resources) of breaking it prohibitive — ideally it would take longer than the length of time the data is useful, or cost more than the data is worth, to break the encryption… or both.

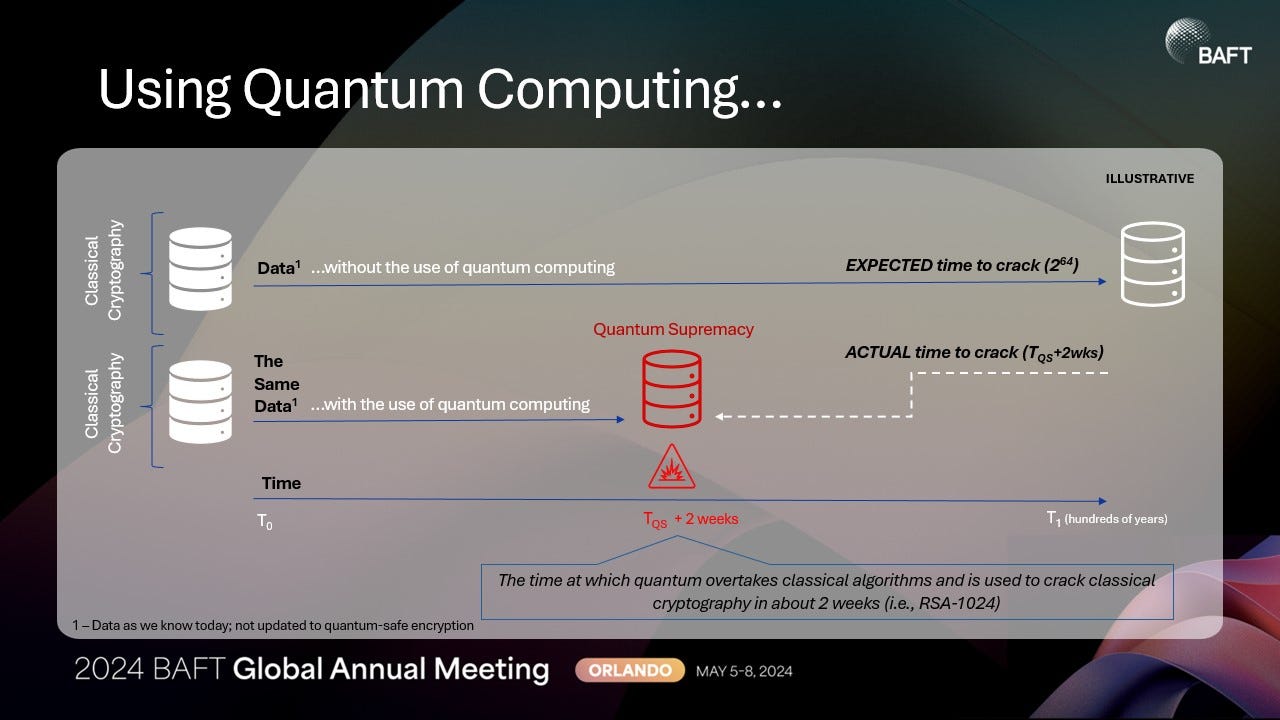

Today, most computers use classical cryptography: traditional algorithms that don’t consider quantum computing. The expected time to crack an encryption through this method is hundreds or thousands of years, even when throwing huge amounts of computing power at them.

However, there exist “quantum algorithms” (methods of solving the math in ways that require probabilistic calculation of the sort quantum computers are good at) developed by mathematicians and cryptographers that would break these algorithms significantly faster.

When a quantum computer exists to execute these algorithms, that time shrinks to weeks — or days!

Lucky for us, we don’t yet live in a world with powerful enough quantum computers that using them to attack this problem is possible. But that won’t always be the case.

We call the point in time when suitably powerful quantum computers are able to overtake the performance of classical (non-quantum) computing Quantum Supremacy.

Once this is reached, cracking an algorithm like RSA-1024 (a common, and currently secure, encryption algorithm) changes from hundreds or thousands of years … to about 14 days.

How will this help gain unauthorized access?

While the math behind quantum computing can be quite interesting — involving linear algebra — the main interest for data access is that quantum algorithms break the assumptions in classical computing. Specifically, quantum computing undermines the expectation that encryption is hard (in time and resources) to overcome.

When it comes to data encryption used for access control, the obvious challenge looming on the horizon lies in data that is transmitted using breakable cryptography after quantum algorithms (used to break that cryptography) are available.

But that belies a larger problem that is already upon us — existing data breaches and data interception happening today.

We trust that encrypting our data means that even if that data is somehow obtained (whether from a filesystem or database at rest, or from messages sent over networks in transit), the data is safe because it can’t be decrypted. But what happens if that obtained data is valuable for a long time — or permanently — and a way to break it comes along in the future?

Such data, using non-quantum safe encryption, can be captured today and cracked later, once quantum computing is available/accessible. If the previously obtained data is still valuable at that point in time (after quantum supremacy), then switching to quantum-safe cryptography in the future won’t protect data stolen now.

So, this reveals at least two attack scenarios for encryption:

- Captured in real time for cracking (after quantum supremacy)

- Captured now and stored for cracking later (at time of quantum supremacy)

How difficult is this (at time of quantum supremacy)?

Not very. Once a computer exists, breaking RSA-1024 is trivial.

A suitably powerful trapped ion quantum computer with sufficient power requires around fourteen days to break RSA-1024 using a quantum algorithm called Shor’s Algorithm.

A classical computing method using the current record-holding algorithm (the General Number Field Sievei123) would take thousands or millions of years depending on how much hardware thrown at it (~4 million years based on our benchmarks for a single-core GNFS using a 2.4ghz i7).

Quantum computing, therefore, becomes very attractive for malicious actors looking to pose old threats — like unauthorized access — to help commit fraud.

So, what do we do about this?

Understand the data that you have, how it’s stored, and its “lifetime” (how long the data has meaning and value). If that data needs to be protected for a long time (like immutable health information or PII, for example), start protecting it now.

This doesn’t require knowledge of quantum algorithms, or how quantum computers work. It just requires us to know our assets, their criticality, and that new algorithms (quantum-safe algorithms, which can’t just be broken using a quantum computer) exist.

Re-encrypting all of our data and modifying our applications to use new cryptography methods isn’t free — but it is manageable.

Ultimately, we do not need to chase the new technology, but rather double-down on the problem space we already have — protecting what is most important.

Cyber Risk: How to handle emerging technology in fraud?

While the technologies addressed here and their use in fraud is new, their attack space is not new at all: targeting identity and processes, data and access. These emerging technologies are simply pointed towards targets that are already known, and hopefully, these targets are already in our list/register of “what to protect.”

Emerging technologies exist, but chasing them isn’t the answer. Instead, double down on what you need to protect.

Understand your problem space — your assets, your applications and workflows, your processes, and how those things can be interacted with and targeted.

What assets are at risk? What are you protecting? If you know what needs protecting and lean just slightly into the ways it may be targeted in the future, you don’t need to chase each new emerging technology.

Need help understanding what assets are critical to your organization? Contact us at Neuvik. We’re happy to help.

About the Authors

Ryan Leirvik

Ryan is the Founder and CEO of Neuvik. He has over two decades of experience in offensive and defensive cyber operations, computer security, and executive communications. His expertise ranges from discovering technical exploits to preparing appropriate information security strategies to defend against them. Ryan is also the author of “Understand, Manage, and Measure Cyber Risk,” former lead of a R&D cybersecurity company, Chief of Staff and Associate Director of Cyber for the US Department of Defense at the Pentagon, cybersecurity strategist with McKinsey & Co, and technologist at IBM.

- Twitter: https://x.com/Leirvik

- LinkedIn: https://www.linkedin.com/in/leirvik/

Tillery

Tillery is Neuvik’s Director of Professional Cybersecurity Training. Tillery has been in formal education and professional training roles for the US Department of Defense as well as for commercial companies for more than a decade. Tillery brings deep technical knowledge and pedagogical training to instruction in cybersecurity, computer science, and mathematics.

- Twitter: https://x.com/AreTillery

- LinkedIn: https://www.linkedin.com/in/aretillery/

About Neuvik

Neuvik is a cybersecurity services company organized into cross-discipline offerings focused on integrating assessments, risk management, and targeted practitioner skill and capability development into comprehensive security solutions.

You can learn more about Neuvik here:

- Website: Neuvik | Cybersecurity Expertise

- Twitter: https://twitter.com/Neuvik

- LinkedIn: https://www.linkedin.com/company/neuvik-solutions/

Footnotes:

¹No fraud was committed during this experiment. Permission from the authorized account holder was provided and properly supervised to complete this transaction.

²The content of the audio was not edited. The audio length was edited for brevity — to keep approx. 2 min in length.